An In-Depth Look into Deployment Strategies: Pros, Cons, and the Role of Load Balancers and API Gateways

Software deployment is an intricate process involving all the activities that make a software system available for use. Several deployment strategies have evolved, each with unique characteristics, benefits, and drawbacks. This article dives deep into five techniques: Big Bang Deployment, Blue-Green Deployment, Canary Deployment, Feature Toggle Deployment, and A/B Testing Deployment. It also covers rollback as a safety net and the role of load balancers and API gateways in these strategies.

1. Big Bang Deployment

As the name suggests, Big Bang deployment involves deploying a new version of software or system simultaneously across all parts of the environment. It is one of the earliest and most straightforward deployment methodologies, and it is still used in some specific contexts today. The idea is simple: you prepare the new version of the software, halt the current system, and replace it entirely with the new version.

Deployment Process

1. Development and Testing: The new software or system is designed, coded, and extensively tested in an isolated environment. This process continues until the development team is confident that the new version is ready for deployment.

2. Deployment Preparation: Next, the necessary infrastructure and resources needed for the new version are prepared. This could involve setting up new servers, configuring databases, etc.

3. System Shutdown: The currently running version of the software is shut down. This can lead to downtime where the system is unavailable to users. This downtime can vary, from a few minutes to several hours, depending on the complexity of the software.

4. Deployment: The new version of the software is deployed across all parts of the environment.

5. Validation: After the new software is deployed, it is tested to ensure it’s functioning as expected in the live environment.
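
The shutdown, deployment, and validation steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a real deployment tool: the server records, the `big_bang_deploy` function, and the `validate` callback are all hypothetical names introduced here.

```python
from typing import Callable, Dict, List

# Hypothetical server record with "name", "version" and "running" keys.
Server = Dict[str, object]


def big_bang_deploy(
    servers: List[Server],
    new_version: str,
    validate: Callable[[Server], bool],
) -> bool:
    """Replace the version on every server at once, then validate."""
    # System shutdown: from here until restart, no server can take traffic.
    for server in servers:
        server["running"] = False

    # Deployment: every server receives the new version in the same window.
    for server in servers:
        server["version"] = new_version

    # Restart, then validate the live environment.
    for server in servers:
        server["running"] = True
    return all(validate(server) for server in servers)


fleet = [{"name": f"app-{i}", "version": "1.0", "running": True} for i in range(3)]
ok = big_bang_deploy(fleet, "2.0", lambda s: s["running"] and s["version"] == "2.0")
print(ok, [s["version"] for s in fleet])
```

Note that there is no point in the function at which old and new versions coexist, which is the source of both the simplicity and the downtime discussed below.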

Pros and Cons

The Big Bang deployment strategy comes with several benefits and drawbacks that organizations should consider:

Pros:

- Simplicity: Since all components of the new system are deployed at once, there’s less complexity in managing multiple versions of the software.

- Complete Upgrade: With Big Bang, users are directly upgraded from the old version to the new one without experiencing the intermediate or mixed versions that other strategies like canary or blue-green deployments might entail.

Cons:

- High Risk: The impact could be massive if the new system has a critical bug or failure that wasn’t caught during testing. Every user would experience the problem, potentially leading to a complete system outage.

- Downtime: Since the entire system must be taken offline for deployment, it often leads to downtime. This can disrupt service availability and impact user experience.

- Difficult Rollback: If the new system fails, rolling back to the old system can be time-consuming and challenging, as it often involves another period of downtime.

Big Bang deployment is a viable strategy for simple systems or smaller user bases when downtime can be tolerated. However, strategies that minimize risk and downtime, like blue-green or canary deployments, are generally more desirable in today’s competitive and fast-paced environment.

2. Blue-Green Deployment

Blue-Green Deployment is a software release management strategy designed to reduce downtime and risk by running two identical production environments, Blue and Green.

Deployment Process

1. Preparation: Two environments are set up, capable of running the application. At the start, Blue is the live environment running the current version of the software, while Green is idle.

2. Green Deployment: The new version of the software is deployed to the Green environment, which is not currently serving user traffic.

3. Testing: Extensive testing is conducted on the Green environment to ensure the new software version functions as expected. This can include performance and security checks.

4. Routing Traffic: Once the Green environment is confirmed stable, the routing mechanism is adjusted to direct all incoming traffic from the Blue environment to the Green one. The Green environment is now live.

5. Blue Idle or Reset: The Blue environment, which is no longer live, can now be idled for potential rollback or reset for the next deployment.
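
As a rough sketch of the process above, the switch between environments can be modeled as swapping two references. The `Environment` and `Router` classes and the `smoke_test` function are hypothetical stand-ins for real infrastructure (a load balancer, DNS entry, or service mesh rule), assumed here purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    name: str
    version: str


@dataclass
class Router:
    """Hypothetical stand-in for the routing mechanism in front of both environments."""

    live: Environment
    idle: Environment

    def deploy_to_idle(self, version: str) -> None:
        # The new version goes to the environment that serves no traffic.
        self.idle.version = version

    def switch(self) -> None:
        # Cutover: the idle environment becomes live and vice versa.
        self.live, self.idle = self.idle, self.live

    def handle_request(self) -> str:
        return f"{self.live.name}:{self.live.version}"


def smoke_test(env: Environment) -> bool:
    # Placeholder check; a real pipeline would run health and integration tests.
    return not env.version.endswith("-broken")


router = Router(live=Environment("blue", "1.0"), idle=Environment("green", "1.0"))
router.deploy_to_idle("1.1")
if smoke_test(router.idle):
    router.switch()
print(router.handle_request())  # green:1.1

router.switch()  # rollback is the same operation in reverse
print(router.handle_request())  # blue:1.0
```

Because rollback is just another `switch()`, the old environment has to stay intact until the new one has proven itself.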

Pros and Cons

Blue-Green deployment offers several advantages but also presents some challenges:

Pros:

- Zero-Downtime Deployment: Because the new version is released by simply switching environments, there is minimal to zero service downtime.

- Instant Rollback: If something goes wrong in the Green environment, you can quickly switch back to the Blue environment, thereby minimizing the impact of errors or bugs in the new version.

- Isolated Testing Environment: The idle environment (Blue or Green) can serve as a full-scale testing and staging environment, reducing the risk of undiscovered bugs in the live environment.

Cons:

- Requires Double Resources: This strategy requires maintaining two production environments, doubling infrastructure and resource requirements.

- Data Synchronization Issues: Any data changes in the Blue environment (like user-generated data) during the Green deployment and testing phase must be synchronized in the Green environment. This can be challenging and complex, especially for large applications.

- Manual Intervention: Depending on the application and infrastructure, switching traffic from Blue to Green might require manual intervention, potentially leading to human errors.

Blue-Green deployment is an effective strategy for continuous deployment, ideal for services where uptime is critical. It allows for safe testing, validation, and quick rollback if necessary. However, organizations must be ready to manage the resource requirements and data synchronization challenges this method introduces.

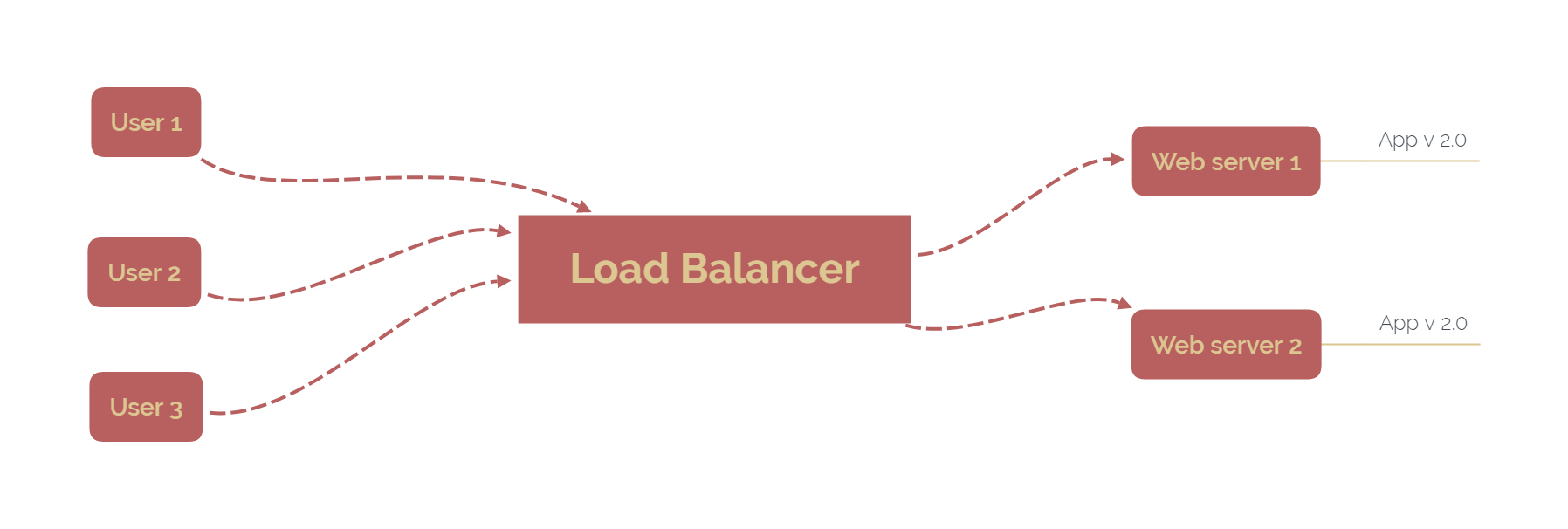

3. Canary Deployment

Canary Deployment is a software release management strategy named after the “canary in a coal mine” concept. Canaries were historically used in coal mining to alert miners to toxic gases. Similarly, a Canary Deployment aims to detect potential issues with a new software version by exposing it to a small, controlled group of users.

Deployment Process

1. Initial Release: The new version of the software is deployed to a small subset of servers or containers, and a corresponding proportion of user traffic is routed to this new version. The users in this phase act as the ‘canaries’.

2. Monitoring: The new version’s performance is closely monitored to identify issues or bugs. This can involve tracking error rates, response times, resource usage, and gathering direct user feedback.

3. Analysis and Decision: If the new version performs well and no critical issues are found, it’s gradually rolled out to more users. If issues are identified, the deployment can be halted, and the affected users can be rerouted to the older version.

4. Full Rollout: This process of expanding the user base for the new version continues until it’s fully rolled out and all users are directed to it.
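
The rollout loop described above might look roughly like the following sketch. The stage percentages, the 1% error threshold, and the `error_rate_at` callback are assumptions chosen for illustration; in practice the error rate would come from a monitoring system.

```python
import random
from typing import Callable, Sequence


def pick_version(canary_percent: float, rng: random.Random) -> str:
    """Route one request: 'canary' with probability canary_percent / 100."""
    return "canary" if rng.random() * 100 < canary_percent else "stable"


def run_canary(
    stages: Sequence[int],
    error_rate_at: Callable[[int], float],
    max_error_rate: float = 0.01,
) -> int:
    """Walk through rollout stages and return the final canary traffic share.

    Returns 0 if any stage exceeds the error threshold (all traffic goes
    back to the stable version), otherwise the last stage's percentage.
    """
    for percent in stages:
        if error_rate_at(percent) > max_error_rate:
            return 0  # halt and reroute everyone to the stable version
    return stages[-1]


# Healthy release: every stage stays under the threshold.
print(run_canary([5, 25, 50, 100], lambda percent: 0.001))

# Faulty release: errors spike once 25% of traffic reaches the canary.
print(run_canary([5, 25, 50, 100], lambda percent: 0.05 if percent >= 25 else 0.001))
```

In the faulty case only the users routed to the canary during the first two stages are exposed to the problem, which is the risk reduction this strategy is designed to provide.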

Pros and Cons

Canary Deployment offers several advantages but also has its challenges:

Pros:

- Reduced Risk: Initially releasing the new version to a small user group reduces the risk of a wide-scale problem. If a critical issue arises, it only affects a small portion of the user base.

- Real-World User Testing: Canary releases allow for real-world testing and user feedback before a full-scale deployment, which can provide insights that may not be evident from internal testing.

- Gradual Resource Scaling: This strategy enables gradual scaling of resources with the new version, reducing the risk of resource-related issues that can occur with sudden, large-scale deployments.

Cons:

- Complexity: Managing and monitoring different software versions for user subsets can be complex and require sophisticated orchestration and monitoring tools.

- Inconsistent User Experience: Different users may have different experiences during the rollout period, as some are on the new version while others are on the old one.

- Extended Rollout Period: Depending on the user base size and the monitoring period for each rollout phase, full deployment can take longer compared to other methods.

Canary Deployment is an effective strategy for minimizing risk during deployment, especially for large-scale, user-facing applications. However, it requires a mature infrastructure and tooling to manage and monitor effectively.

4. Rollback Deployment

A rollback is a deployment strategy that involves reverting the system to a previous, stable state following a failed deployment or detected issue with a new release.

Deployment Process

- Baseline State: The software’s current stable state is stored as a baseline before deploying a new release. This includes both the software version and any related data or configuration states.

- Deploy: The new version of the software is deployed.

- Monitor: Following the deployment, the system is closely monitored. This can involve automated tests, user feedback, or system performance metrics.

- Issue Detection: If a critical issue is detected, a decision is made to perform a rollback.

- Rollback: The system is reverted to its previous state using the stored baseline. This may involve reverting software changes, configuration changes, or even data (if feasible and applicable).
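
A minimal sketch of the baseline-and-restore cycle above, assuming the state that needs protecting is just a version string and a configuration dictionary. The `Deployment` class and its method names are hypothetical, and reverting data is deliberately left out because it is rarely this simple.

```python
import copy
from typing import Callable, Dict, Optional, Tuple


class Deployment:
    """Hypothetical deployment holding a version and its configuration."""

    def __init__(self, version: str, config: Dict[str, object]) -> None:
        self.version = version
        self.config = config
        self._baseline: Optional[Tuple[str, Dict[str, object]]] = None

    def deploy(self, version: str, config_changes: Dict[str, object]) -> None:
        # Baseline state: snapshot version and config before changing anything.
        self._baseline = (self.version, copy.deepcopy(self.config))
        self.version = version
        self.config.update(config_changes)

    def rollback(self) -> None:
        if self._baseline is None:
            raise RuntimeError("no baseline stored")
        self.version, self.config = self._baseline
        self._baseline = None


def release(app: Deployment, version: str, changes: Dict[str, object],
            is_healthy: Callable[[Deployment], bool]) -> bool:
    """Deploy, monitor once, and roll back if the health check fails."""
    app.deploy(version, changes)
    if not is_healthy(app):
        app.rollback()
        return False
    return True


app = Deployment("1.0", {"timeout": 30})
released = release(app, "1.1", {"timeout": 5}, lambda d: d.config["timeout"] >= 10)
print(released, app.version, app.config)
```

The deep copy matters: without it, the stored baseline would share the same dictionary as the live configuration and would be modified by the deployment it is meant to protect against.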

Pros and Cons

Like any strategy, rollback deployment has its strengths and potential challenges:

Pros:

- Risk Mitigation: A rollback strategy can reduce the risk of a new release by providing a ‘safety net’ if critical issues are discovered. This can prevent prolonged periods of system downtime or broken functionality.

- Quick Recovery: When an issue is detected, a rollback can be initiated immediately, ensuring rapid recovery to a known, stable state.

Cons:

- Data Inconsistency: Depending on the changes made in the new release, rolling back can leave data in an inconsistent state. For example, if the new release included database schema changes, it might not be possible to revert the data to its previous state.

- Resource-Intensive: Creating, storing, and managing the baselines needed for rollback can be resource-intensive. This might not be feasible for all organizations or applications.

In the grand scheme of the deployment process, rollback is better seen as an insurance policy than as a standalone strategy. It is a measure of last resort when other strategies like Blue-Green, Canary, or Feature Toggle fail to mitigate risks effectively. While the ability to roll back is an essential part of any deployment strategy, the primary focus should be on robust testing and monitoring processes that catch issues before deployment or, in the case of strategies like Canary and Blue-Green, during the early stages of release.

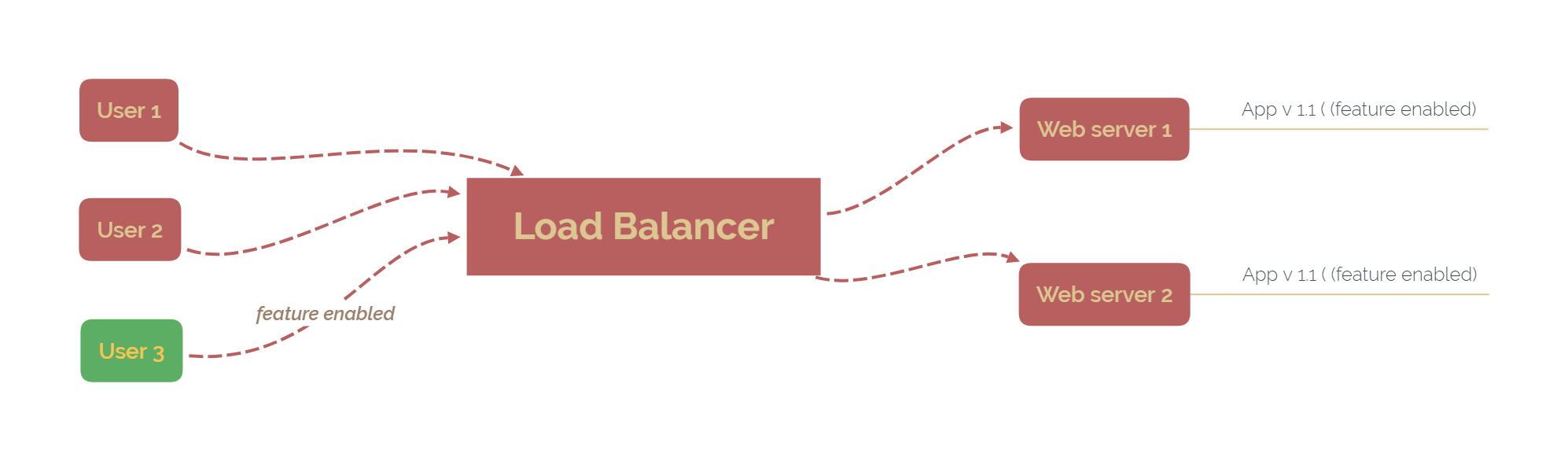

5. Feature Toggle Deployment

Feature Toggle Deployment (also known as Feature Flags) is a technique in software development that allows teams to modify a system’s behavior without changing code. It provides the ability to switch on or off certain functionalities of an application, allowing developers to release, hide, enable, or disable features in production.

Deployment Process

- Toggle Implementation: Developers introduce toggles in the code to enable or disable certain features. These toggles can be controlled externally without the need for additional code changes.

- Deployment: The application is deployed with the new features ‘hidden’ or ‘disabled’ by the toggles. Since these features are not accessible to users, they do not affect the application’s functioning.

- Toggle Activation: When the team decides to release a new feature, the corresponding toggle is ‘enabled’. The feature becomes available to users without the need for a new deployment.

- Monitoring and Feedback: Once the feature is enabled, its performance and user feedback are monitored. If any issues are found, the feature can be ‘disabled’ instantly using the toggle.

- Toggle Cleanup: After a feature is stable and no longer needs to be hidden or toggled, the corresponding toggle code should be removed during future development cycles to avoid code clutter.
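
The toggle lifecycle above can be illustrated with a small in-memory flag store. This is a sketch under simplifying assumptions: the `FeatureFlags` class, the `new_checkout` flag, and the `checkout` function are hypothetical, and a real system would typically load flags from configuration or a flag service rather than a dictionary.

```python
from typing import Dict, Optional


class FeatureFlags:
    """Hypothetical in-memory flag store that can be changed at runtime."""

    def __init__(self, flags: Optional[Dict[str, bool]] = None) -> None:
        self._flags = dict(flags or {})

    def is_enabled(self, name: str) -> bool:
        # Unknown flags default to off, so new code stays hidden by default.
        return self._flags.get(name, False)

    def set(self, name: str, enabled: bool) -> None:
        self._flags[name] = enabled


def checkout(cart_total: float, flags: FeatureFlags) -> str:
    # Both code paths ship in the same deployment; the flag picks one.
    if flags.is_enabled("new_checkout"):
        return f"new checkout flow: {cart_total:.2f}"
    return f"legacy checkout flow: {cart_total:.2f}"


flags = FeatureFlags({"new_checkout": False})
print(checkout(19.99, flags))      # deployed but hidden

flags.set("new_checkout", True)    # toggle activation, no redeploy
print(checkout(19.99, flags))

flags.set("new_checkout", False)   # instant disable if issues appear
print(checkout(19.99, flags))
```

Once the new flow is stable, the cleanup step amounts to deleting the `if` branch and the legacy path so that `checkout` no longer consults the flag.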

Pros and Cons

Feature Toggle Deployment has many advantages but also poses some challenges:

Pros:

- Reduced Risk: Toggles reduce risk by providing the ability to ‘hide’ new features until they’re ready and ‘disable’ them instantly if issues are found in production.

- Flexibility: Toggles allow the release of features according to business timelines or events, independent of deployment schedules.

- Testing in Production: Feature flags can be used to perform canary or A/B testing in the production environment, providing real-world insights before a full-scale release.

Cons:

- Code Complexity: If not managed properly, feature toggles can lead to a more complex codebase, as the application must handle both ‘enabled’ and ‘disabled’ states for each toggle.

- Technical Debt: Toggles that are no longer needed must be removed promptly, or they can contribute to technical debt. If forgotten, they could lead to redundant code and unnecessary condition checks.

- Dependency Challenges: Complex dependencies between different feature flags can lead to unexpected application behaviors, especially when certain features are enabled or disabled.

Feature Toggle Deployment is a powerful strategy that provides flexibility and risk mitigation in releasing new features. However, it requires robust management practices to avoid increased code complexity and technical debt.

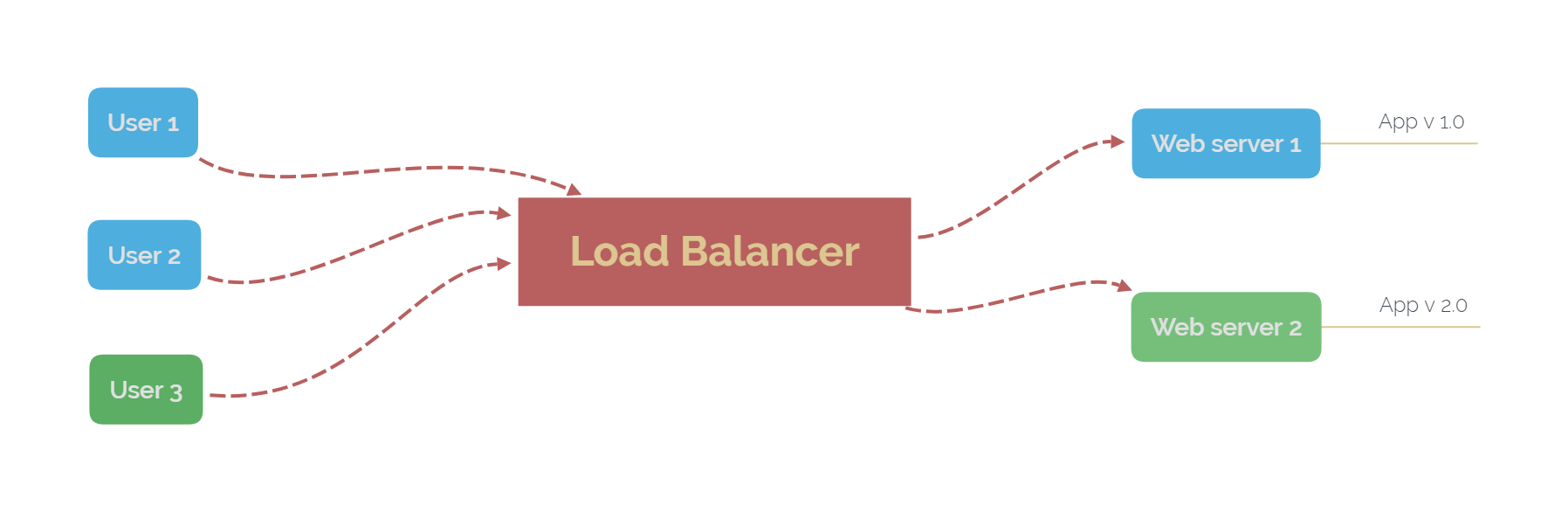

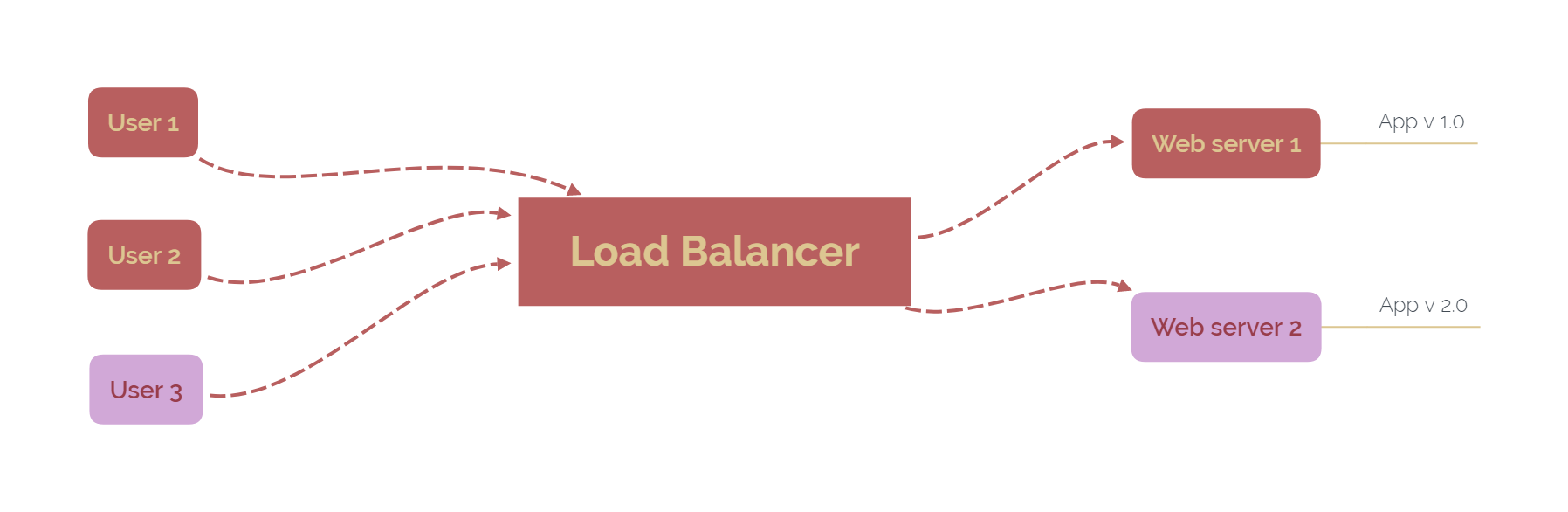

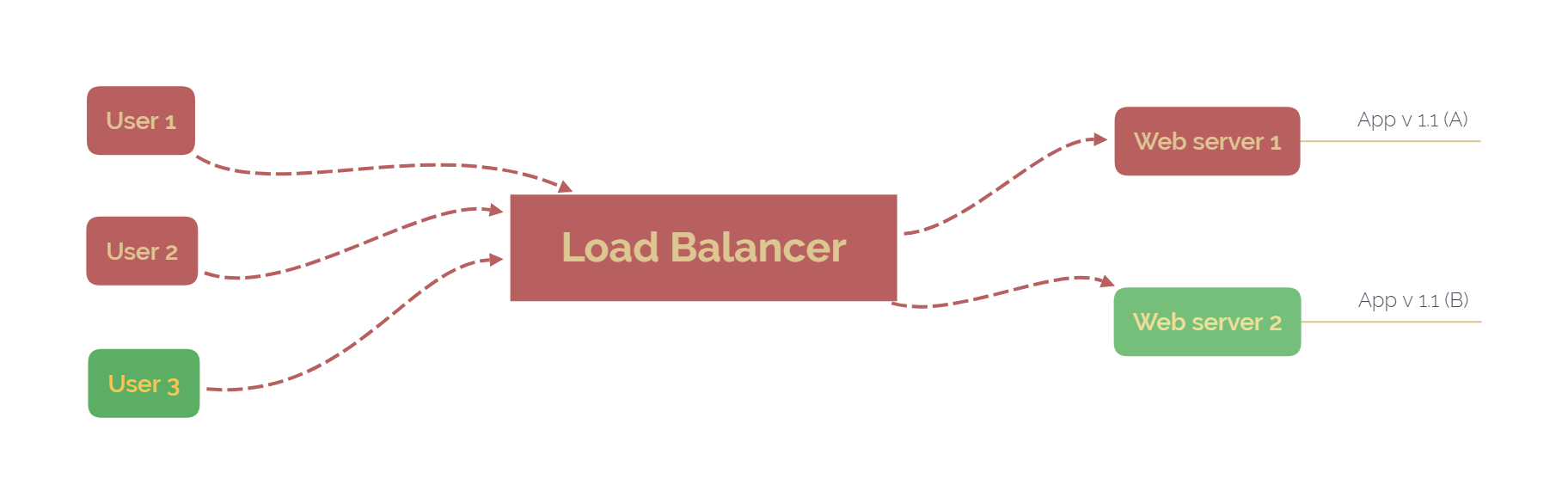

6. A/B Testing Deployment

A/B testing, also known as split testing, compares two versions of an application or web page to determine which one performs better. While it’s commonly associated with marketing and web design for testing user interface and user experience changes, it’s also used in software deployment to test new features, workflows, or algorithms.

Deployment Process

- Hypothesis Formation: Identify a feature or aspect you want to improve and create two different versions: ‘A’ (the control, usually the current version) and ‘B’ (the variant which contains the changes or new features).

- Split User Base: Your user base is randomly divided into two groups. Group one interacts with version ‘A’ and group two with version ‘B’.

- Monitoring and Data Collection: User interactions and key metrics are monitored across both versions. Metrics collected may include conversion rates, bounce rates, session duration, error rates, and other relevant KPIs.

- Analysis: Once enough data has been collected, an analysis is performed to determine which version achieved the desired results more effectively.

- Rollout or Iteration: If version ‘B’ shows a clear improvement over ‘A’, the changes are usually rolled out to all users. If there is no clear winner or ‘B’ performs worse, the insights gained can guide further iterations and tests.
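
Two pieces of the process above lend themselves to a short sketch: splitting users consistently, and checking whether an observed difference is likely to be real. The example below assumes hash-based bucketing and a two-proportion z-test, which are common choices but not the only ones; the function names and the sample numbers are made up for illustration.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str) -> str:
    """Deterministically assign a user to 'A' or 'B'.

    Hashing the experiment name together with the user ID keeps each user
    in the same group across requests, without storing any assignment.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def z_score(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-proportion z-score for the difference in conversion rates (B minus A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / std_err


print(assign_variant("user-123", "checkout-button"))

# Made-up numbers: 100 of 1000 users convert on A, 130 of 1000 on B.
z = z_score(100, 1000, 130, 1000)
print(f"z = {z:.2f}, significant at the 5% level: {abs(z) > 1.96}")
```

The 1.96 cutoff corresponds to a two-sided test at the 5% significance level. With much smaller groups the same relative improvement would not clear it, which is why A/B testing needs meaningful traffic.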

Pros and Cons

A/B testing deployments have their strengths and challenges:

Pros:

- Data-Driven Decisions: A/B testing provides concrete data on how changes impact user behavior, leading to more informed decisions about which features or changes to implement.

- Risk Mitigation: By testing changes with a small group of users before a full rollout, you can catch potential issues early and reduce the risk of negatively impacting the entire user base.

- Enhanced User Experience: Over time, iterative A/B testing can lead to a highly optimized application that offers a superior user experience and meets business goals.

Cons:

- Time-Consuming: Designing, implementing, and analyzing A/B tests can be time-consuming. It’s not always the fastest way to get changes or new features in front of users.

- Requires Significant Traffic: Many users must interact with both versions to get statistically significant results from an A/B test. This can be a limitation for applications with a smaller user base.

- Multiple Variables: If version ‘B’ has multiple changes compared to ‘A’, it can be challenging to determine which specific change led to a difference in performance.

A/B testing is a powerful deployment strategy that can help teams understand how specific changes impact user behavior and application performance. However, it requires careful planning and execution and a data-driven approach to product development.

Load Balancers and API Gateways: Key Elements in Deployments

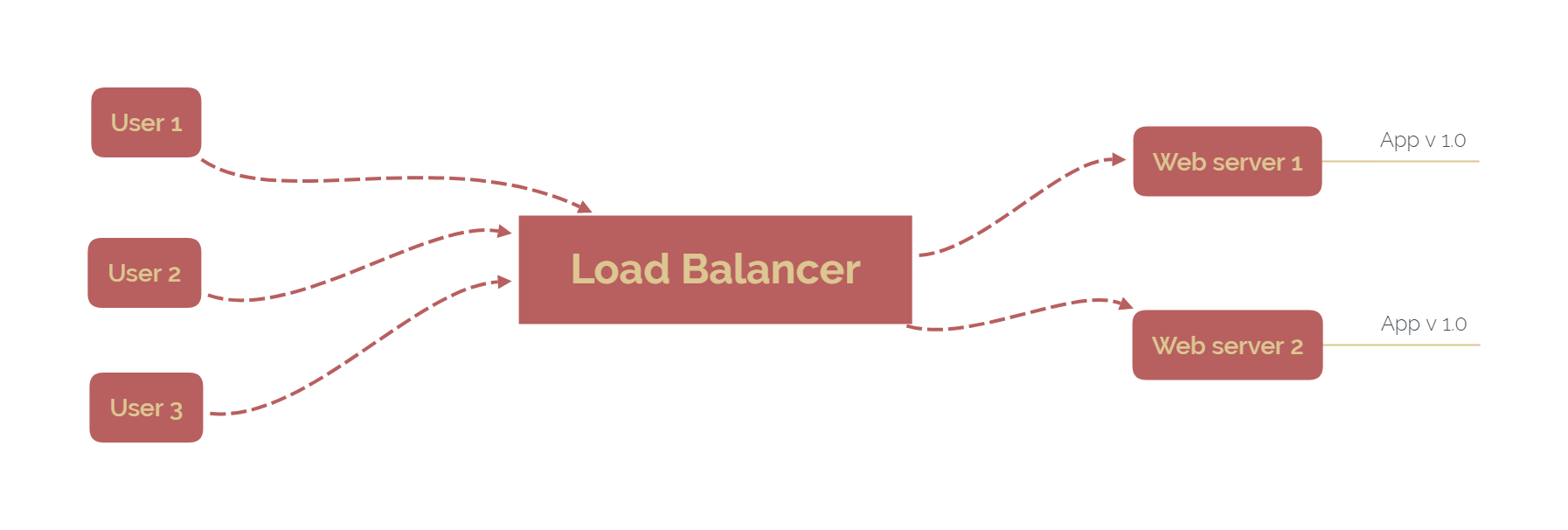

Load Balancers

A load balancer is a crucial component of any high-availability infrastructure. It’s a device or software component that distributes network or application traffic across many servers or clusters to optimize resource use, minimize response time, and avoid overloading any single server.

Load balancers play a crucial role in deployments:

- Traffic Distribution: Load balancers ensure that no single server bears too much load, efficiently distributing incoming network traffic across multiple servers. This is particularly important during high-traffic periods or when a new version of an application is deployed, and user interactions are high.

- High Availability and Redundancy: By distributing the load among multiple servers, load balancers can provide additional layers of availability and redundancy. If one server fails, the load balancer redirects traffic to the remaining online servers.

- Performance Improvement: By balancing request loads, load balancers help reduce individual server loads and prevent one server from becoming a bottleneck, improving overall application response time.

- Scalability: Load balancers allow applications to scale more effectively. As the volume of traffic increases, additional servers can be added to the load balancer pool, providing a seamless scaling experience.
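
To make traffic distribution and failover concrete, here is a minimal round-robin balancer that skips servers marked as unhealthy. It is a sketch only: the `RoundRobinBalancer` class is hypothetical, and real load balancers run their own health checks and support other algorithms such as least-connections or weighted routing.

```python
from typing import List, Set


class RoundRobinBalancer:
    """Hypothetical balancer that rotates through servers, skipping unhealthy ones."""

    def __init__(self, servers: List[str]) -> None:
        self.servers = list(servers)
        self.healthy: Set[str] = set(self.servers)
        self._next = 0

    def mark_down(self, server: str) -> None:
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        if server in self.servers:
            self.healthy.add(server)

    def add_server(self, server: str) -> None:
        # Scaling out: new servers join the rotation immediately.
        self.servers.append(server)
        self.healthy.add(server)

    def pick(self) -> str:
        # Try each server at most once, starting from the rotation pointer.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([lb.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']

lb.mark_down("app-2")                 # a failed server is skipped
print([lb.pick() for _ in range(3)])  # ['app-3', 'app-1', 'app-3']
```

The same `mark_down` and `mark_up` calls are what a deployment tool would use to drain a server before upgrading it and return it to the pool afterwards.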

API Gateways

An API Gateway is a server that acts as an API front-end, receiving API requests, enforcing throttling and security policies, passing requests to the back-end service, and then passing the response back to the invoker.

API Gateways have a crucial role in deployments:

- Routing: API Gateways handle routing requests to the correct services. This becomes particularly important in microservices architectures or canary deployments, where different API requests might need to be routed to different service versions.

- Abstraction and Decoupling: API Gateways can decouple the client from your system’s backend services, allowing each to evolve independently. This abstraction can simplify client interactions, particularly when deployments result in service changes.

- Security: API Gateways often handle critical security tasks, such as API key validation, rate limiting to help mitigate abuse and denial-of-service attacks, and authentication and authorization.

- Consolidation: In deployments involving multiple microservices, the API Gateway can consolidate the responses from several services and return them to the client as a single response, simplifying client-side handling.
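
The routing and security responsibilities above can be sketched as a single request handler. Everything here is a simplifying assumption: the `Gateway` class is hypothetical, backends are plain Python functions rather than network services, and the rate limit is a simple per-key counter that never resets, where a real gateway would use time windows or token buckets.

```python
from typing import Callable, Dict, Iterable, Tuple


class Gateway:
    """Hypothetical API gateway: key validation, rate limiting, prefix routing."""

    def __init__(
        self,
        routes: Dict[str, Callable[[str], str]],
        api_keys: Iterable[str],
        limit_per_key: int,
    ) -> None:
        self.routes = routes              # path prefix -> backend function
        self.api_keys = set(api_keys)
        self.limit_per_key = limit_per_key
        self._counts: Dict[str, int] = {}

    def handle(self, api_key: str, path: str) -> Tuple[int, str]:
        # Security: reject unknown callers before touching any backend.
        if api_key not in self.api_keys:
            return 401, "invalid API key"

        # Throttling: simple counter per key (no time window in this sketch).
        self._counts[api_key] = self._counts.get(api_key, 0) + 1
        if self._counts[api_key] > self.limit_per_key:
            return 429, "rate limit exceeded"

        # Routing: the first matching prefix decides which backend responds.
        for prefix, backend in self.routes.items():
            if path.startswith(prefix):
                return 200, backend(path)
        return 404, "no route"


gateway = Gateway(
    routes={
        "/orders": lambda path: f"orders-v2 handled {path}",
        "/users": lambda path: f"users-v1 handled {path}",
    },
    api_keys=["key-abc"],
    limit_per_key=2,
)
print(gateway.handle("key-abc", "/orders/42"))
print(gateway.handle("wrong-key", "/orders/42"))
print(gateway.handle("key-abc", "/users/7"))
print(gateway.handle("key-abc", "/users/7"))  # third call exceeds the limit
```

Because clients only see the gateway, pointing `/orders` at a new service version is a change to the `routes` table and requires no client update.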

Load balancers and API Gateways are critical components in modern deployments. Load balancers ensure the efficient distribution of network traffic for optimal performance and enhanced scalability, while API Gateways manage and secure the interface between clients and back-end services, improving the manageability and security of the system.

Best Practices in CI/CD

Software deployment strategies such as Big Bang, Blue-Green, Canary, Feature Toggle, and A/B Testing Deployment can be incorporated into the Continuous Integration/Continuous Deployment (CI/CD) process to enhance software development workflow, release management, and risk mitigation. Let’s examine how each can fit into the CI/CD process.

- Big Bang Deployment: While this strategy may not align well with the principles of CI/CD, as it involves a complete replacement of the system at once, it can still be integrated into the CI/CD pipeline. The development team would commit and merge changes into the main branch throughout development. When the time for release comes, CI/CD would facilitate the automated building and testing of the software, followed by the shutdown of the old version and the startup of the new version.

- Blue-Green Deployment: Blue-green deployments can be automated within a CI/CD pipeline. After integration, where the new code is merged and tested, a CD tool can deploy the new version to the idle environment (Green). Post-deployment tests can be run in this environment, and if successful, the tool can switch the load balancer to direct traffic to the Green environment.

- Canary Deployment: Canary deployments can also be incorporated into CI/CD. Once the new code is integrated and tested, the CD tool can deploy the new version to a subset of the production environment (Canary). Monitoring tools can then measure the performance of the Canary version. If it meets the defined criteria, the CD tool can gradually roll out the new version to the rest of the environment.

- Feature Toggle Deployment: Feature toggles can be implemented in CI/CD by adding toggles to the codebase. When a new feature is ready to be tested or released, the corresponding toggle is switched on in the appropriate environment (testing, staging, or production). The CI/CD pipeline ensures automated building, testing, and deployment of the software with the toggled features.

- A/B Testing Deployment: Implementing A/B testing in a CI/CD pipeline involves deploying two application versions to separate environments. Automated tests run on both, and performance data is collected. Analysis of this data can then be used to decide which version is promoted to production. This strategy can be combined with feature toggles to control which users see which features.
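
What these integrations have in common is a pipeline that runs stages in order and refuses to continue past a failure. The sketch below shows that gating logic only; the `run_pipeline` function and the stage names are hypothetical, and each step is a placeholder for what a real CI/CD tool would execute.

```python
from typing import Callable, List, Optional, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[List[str], Optional[str]]:
    """Run stages in order and stop at the first one that fails.

    Returns the names of completed stages and the name of the failed
    stage (None if every stage succeeded).
    """
    completed: List[str] = []
    for name, step in stages:
        if not step():
            return completed, name
        completed.append(name)
    return completed, None


# Blue-green style pipeline where the post-deployment check fails,
# so the traffic switch is never reached.
stages: List[Stage] = [
    ("build", lambda: True),
    ("unit_tests", lambda: True),
    ("deploy_to_green", lambda: True),
    ("smoke_test_green", lambda: False),
    ("switch_traffic", lambda: True),
]
completed, failed_stage = run_pipeline(stages)
print(completed, failed_stage)
```

Placing the traffic switch after the smoke test is what turns the pipeline into a safety mechanism: a failing check leaves users on the current version.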

Deployment strategies can and should be integrated into the CI/CD process to enhance efficiency, reduce risks, and ensure high-quality software delivery. The best approach depends on your team’s needs, resources, and risk tolerance.

Conclusion

In conclusion, selecting an appropriate deployment strategy is influenced by various factors such as software intricacy, user base scale and geography, the potential implications of system unavailability, and available resources. Additionally, integrating these strategies within the CI/CD pipeline enhances software delivery’s efficiency and risk management. Leveraging the capabilities of load balancers and API gateways further fortifies the deployment process, ensuring efficient traffic distribution, enhanced scalability, and improved interface management between clients and back-end services. Thus, a well-chosen deployment strategy coupled with effective use of these technologies allows for seamless and robust deployments, significantly minimizing disruption and mitigating risks.